Traditional Business Rules vs. Predictive Software Features

By: Lauren Haines, Dec 14, 2023 2:00:00 PM

Predictive maintenance empowers manufacturers to anticipate and take action to address equipment failures before they occur. This proactive approach minimizes downtime, extends the equipment lifecycle, and streamlines maintenance scheduling. Put another way, it offers a competitive edge in an industry where every second and penny counts, propelling innovative manufacturers ahead of their peers.

Predictive maintenance involves models that learn from data to predict imminent equipment failures. Gradient descent is an optimization algorithm used in statistics and machine learning to find the best parameters (weights) for such a model. It iteratively adjusts the model parameters until it minimizes the model’s error, like a marble rolling down the side of a mixing bowl until it settles at the lowest point.
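To make the marble-in-a-bowl picture concrete, here is a minimal sketch of gradient descent on a one-parameter error curve. The bowl-shaped function, the starting point, and the learning rate are illustrative assumptions, not values from the MakerSpace model.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    w = start
    for _ in range(steps):
        w -= learning_rate * gradient(w)
    return w

# Hypothetical "mixing bowl": error(w) = (w - 3)^2, which is lowest at w = 3.
def bowl_gradient(w):
    return 2 * (w - 3)

w_min = gradient_descent(bowl_gradient, start=-5.0)
print(round(w_min, 4))
```

Each step moves the parameter a little further downhill, so it settles close to the bottom of the bowl at w = 3.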

Let’s explore how manufacturers can use gradient descent to their strategic advantage with a practical business case: building a predictive maintenance model for manufacturing equipment.

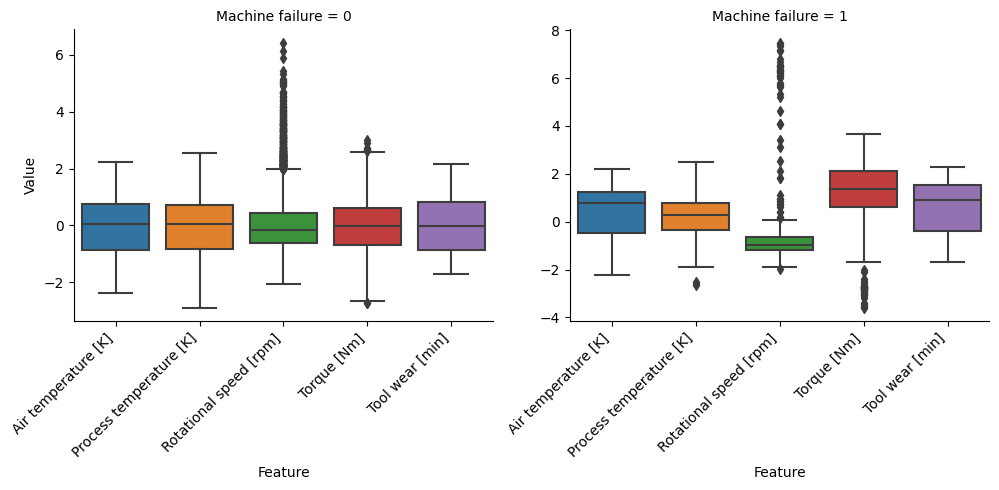

The first step to building a predictive maintenance model is sourcing data to train it. We acquired an open-source, synthetic data set modeled after an existing milling machine to simulate realistic equipment records for a fictitious manufacturing firm, "MakerSpace." The box plots below show the distribution and variation of five machine conditions, or features, in the synthetic data set: Air Temperature [K], Process Temperature [K], Rotational Speed [rpm], Torque [Nm], and Tool Wear [min]. The box plots marked “Machine failure = 0” show the distribution and variation of these features during normal operation. Those marked “Machine failure = 1” show these features’ distribution and variation during instances of machine failure, when maintenance was required.

Note: We normalized the features prior to visualization — a process that ensures that each feature is considered on the same scale. This is important because these features have different units and ranges; for instance, rotational speed in rpm is naturally on a different scale than temperature in Kelvin (K).
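The post does not say which normalization method was used. As one common option, the sketch below standardizes each feature to zero mean and unit variance (a z-score); the sample readings are made up for illustration.

```python
from statistics import mean, pstdev

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]

# Hypothetical readings on very different scales.
rotational_speed_rpm = [1400.0, 1500.0, 1600.0, 2800.0]
air_temperature_k = [298.0, 299.0, 300.0, 303.0]

scaled_speed = standardize(rotational_speed_rpm)
scaled_temperature = standardize(air_temperature_k)
print(scaled_speed)
print(scaled_temperature)
```

After scaling, both features are centered on zero with comparable spread, so neither dominates simply because of its units.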

The box plots reveal differences in the distribution and variance of the five features during normal machine operation and machine failure. For example, the spread of Torque is significantly wider during machine failure than during normal operations, suggesting a correlation between extreme torque and machine failure. A predictive maintenance model can learn to recognize these deviations and predict an imminent machine failure.

Let’s construct a model for MakerSpace, so it can start practicing predictive maintenance. The first step is to split our data set into training data (80 percent of the data) and test data (20 percent of the data).
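An 80/20 split can be sketched in a few lines of plain Python. The helper name `split_train_test`, the seed, and the toy records are assumptions for illustration; the post does not show how its split was actually performed.

```python
import random

def split_train_test(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows, then hold out a fraction as test data."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical stand-in for equipment records.
records = list(range(10))
train_rows, test_rows = split_train_test(records)
print(len(train_rows), len(test_rows))
```

Shuffling before splitting keeps the test data from being, say, only the most recent records, and fixing the seed makes the split reproducible.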

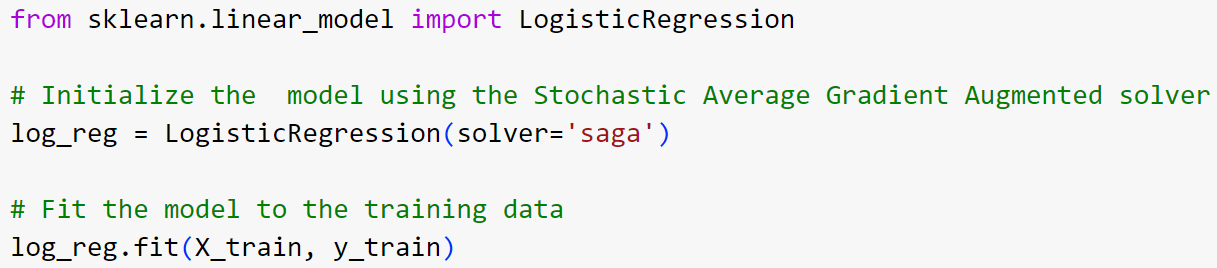

We perform logistic regression on our training data to model the relationship between our five features and machine failure. Logistic regression is a statistical method well-suited for binary classification tasks, such as predicting whether a machine will fail or not. It estimates the probability of machine failure given the features.
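As a rough illustration of how logistic regression turns feature values into a probability, the sketch below passes a weighted sum of the features through the sigmoid function. The weights, bias, and readings are hypothetical, not the fitted MakerSpace parameters.

```python
import math

def failure_probability(features, weights, bias):
    """Logistic model: sigmoid of the weighted sum of the features."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical normalized readings, in the order: air temperature,
# process temperature, rotational speed, torque, tool wear.
reading = [0.2, 0.1, -1.5, 2.3, 1.1]
weights = [0.3, 0.1, 0.4, 1.2, 0.8]  # assumed values, for illustration only
bias = -4.0

p_fail = failure_probability(reading, weights, bias)
print(p_fail)
```

The output is always between 0 and 1, so it can be read as an estimated probability and compared against a threshold (commonly 0.5) to produce a fail or no-fail prediction.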

We rely on gradient descent to fine-tune our model’s parameters to minimize the prediction error, a measure of how often and to what degree the model’s predictions are wrong. This optimization process iteratively adjusts the weight of each feature in the direction that reduces that error. For example, if high Torque readings more often lead to failures than high Rotational Speed readings, gradient descent adjusts the model accordingly, giving more weight to Torque than Rotational Speed.
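The weight-adjustment loop can be sketched with plain batch gradient descent on the logistic (log) loss. This is a simplified stand-in, not the optimizer used for the MakerSpace model, and the six two-feature rows (torque, rotational speed) are invented so that failures track high torque.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(weights, bias, rows, labels):
    """Average logistic loss: the prediction error being minimized."""
    eps = 1e-12
    total = 0.0
    for x, y in zip(rows, labels):
        p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(rows)

def fit(rows, labels, learning_rate=0.5, steps=500):
    """Batch gradient descent on the log loss, starting from zero weights."""
    weights, bias, n = [0.0] * len(rows[0]), 0.0, len(rows)
    for _ in range(steps):
        grad_w, grad_b = [0.0] * len(weights), 0.0
        for x, y in zip(rows, labels):
            error = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x))) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi / n
            grad_b += error / n
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias

# Hypothetical normalized (torque, rotational speed) readings; 1 = failure.
rows = [(-1.0, 0.5), (-0.5, -1.0), (0.0, 1.0), (0.5, -0.5), (1.0, 0.2), (1.5, -0.3)]
labels = [0, 0, 0, 1, 1, 1]

initial_loss = log_loss([0.0, 0.0], 0.0, rows, labels)
weights, bias = fit(rows, labels)
final_loss = log_loss(weights, bias, rows, labels)
print(weights, bias, initial_loss, final_loss)
```

Because torque separates failures from normal operation in this toy data, its learned weight ends up larger in magnitude than the weight on rotational speed, and the loss falls from its starting value.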

Note: Below is the Python code we used to construct our regression model. The “solver” argument of scikit-learn’s “LogisticRegression” class is set to “saga,” a variant of the Stochastic Average Gradient (SAG) method. This argument tells the model to fit its weights with a stochastic, gradient-based optimizer rather than an alternative optimization algorithm.
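The original code is not reproduced in this copy of the post. As a stand-in, here is a minimal sketch, assuming scikit-learn, of fitting a logistic regression with `solver="saga"`. The data comes from `make_classification` rather than the milling-machine data set, and the sample count, iteration limit, and random seeds are arbitrary choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in: 5 features, binary label (not the MakerSpace data).
X, y = make_classification(
    n_samples=1000, n_features=5, n_informative=3, n_redundant=0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Gradient-based solvers such as saga converge faster on scaled features.
scaler = StandardScaler().fit(X_train)

model = LogisticRegression(solver="saga", max_iter=1000, random_state=0)
model.fit(scaler.transform(X_train), y_train)

# Estimated probability of the positive class for each test row.
failure_probabilities = model.predict_proba(scaler.transform(X_test))[:, 1]
print(model.coef_)
```

The scaler is fitted on the training data only and then reused on the test data, so no information from the test set leaks into training.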

The mathematical foundations of gradient descent are advanced and beyond the scope of this short blog post. Our Chief Data Scientist wrote a chapter on the subject, which you can access through the button below. But for our purposes, it’s sufficient to understand that gradient descent is essentially the mechanism by which our model “learns” from its training data to estimate the probability of machine failure as accurately as possible.

We evaluate our model’s performance by asking it to classify our test data. This approach allows us to assess how well the model responds to new data (as opposed to the training data we’ve already given it).

A helpful tool for evaluating model performance in a classification task like predicting machine failure is the confusion matrix: a table that visualizes the performance of a classification model on a set of test data. The confusion matrix below captures our model’s performance in predicting machine failure.

[Image: confusion matrix of the model’s predicted vs. actual machine failure labels on the test data]

Here is a simplified breakdown of the model evaluation results:

True Negatives: The model correctly classified all 1740 test data points representing cases where the machine did not fail (“Machine Failure: 0”). This performance suggests that our model can reliably recognize the conditions that indicate normal machine operation.

True Positives: The model also correctly classified all 60 test data points representing cases where the machine did fail (“Machine Failure: 1”). This performance suggests that our model can reliably recognize the conditions that indicate machine failure.
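The counts above translate into standard metrics with a few lines of arithmetic. In this sketch the true negative and true positive counts come from the breakdown; the zero false positives and false negatives follow from every test point being classified correctly.

```python
# Counts from the confusion matrix described above.
true_negatives = 1740
true_positives = 60
false_positives = 0  # no normal operation flagged as failure
false_negatives = 0  # no failure missed

total = true_negatives + true_positives + false_positives + false_negatives

accuracy = (true_positives + true_negatives) / total
precision = true_positives / (true_positives + false_positives)
recall = true_positives / (true_positives + false_negatives)

print(total, accuracy, precision, recall)
```

Precision measures how many predicted failures were real, and recall measures how many real failures were caught. Failures make up only 60 of the 1800 test points, so these two metrics say more about failure detection than accuracy alone.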

MakerSpace will be pleased with these results, which suggest the following about our model:

High Accuracy: The model classified every test case correctly, indicating very high accuracy. This is ideal for MakerSpace, which wants to avoid both false alarms (false positives) and missed failures (false negatives).

Potential for Real-World Application: Given its performance on the test data, our model shows great promise for implementation in a real-world manufacturing environment. MakerSpace can confidently move forward with using it as the foundation for a predictive maintenance system.

Note: Perfect accuracy is unlikely in real-world applications. It's crucial to ensure that the training and test data are representative of real-world conditions and that a model is not overfitting to the training data. Continuous testing and refinement with different data sets are recommended to ensure good model performance.
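One common way to test a model against different slices of data is k-fold cross-validation, in which each fold takes a turn as the test set. The post does not say this technique was used; the helper below is a hypothetical sketch of how the folds could be generated.

```python
def k_fold_indices(n_rows, k=5):
    """Yield (train_indices, test_indices) pairs for k contiguous folds."""
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_rows))
        yield train_idx, test_idx
        start += size

folds = list(k_fold_indices(10, k=5))
for train_idx, test_idx in folds:
    print(test_idx)
```

Training and scoring the model once per fold gives several accuracy estimates instead of one. A large gap between training and test scores, or wide variation across folds, is a warning sign of overfitting.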

This business case showcases the immense potential and strategic value of incorporating advanced statistical methods and machine learning algorithms like gradient descent into manufacturing processes. As the industry progresses, the application of techniques like logistic regression with gradient descent will become increasingly integral, driving the next wave of industrial innovation and operational excellence.

Beyond its use in manufacturing, gradient descent has valuable applications in numerous other industries. In financial services, it optimizes predictive models for risk assessment and stock price prediction, while in healthcare, it fine-tunes predictive models for accurate disease diagnosis. This versatility makes gradient descent a powerful tool for leveraging data to make predictions, uncover patterns, and improve outcomes across industries.

Photo by Christopher Burns on Unsplash

Synaptiq is an AI and data science consultancy based in Portland, Oregon. We collaborate with our clients to develop human-centered products and solutions. We uphold a strong commitment to ethics and innovation.

